TL;DR: There will be no dog-hosted podcasts discussed here, but please enjoy this adorable image.

I love podcasts and I credit them, those who produce them and their guests for playing a significant role in developing my career and passions. You can skip to the end of this post to check out the podcasts I listen to today, but allow me to pontificate about podcasts and how I like to consume them.

I started listening to podcasts (mostly technical) almost ten years ago. Around that time I got interested in ultra marathons (any run longer than 26.2 miles) and they would keep me company on my monthly 50K runs mostly in the dark through the trails of Chino Hills State Park. Back then I had been developing software professionally for several years and had done some truly cool stuff but mostly in a cave of my own making. I am a self-taught coder and what I knew at the time I had learned mostly from books and my own tinkering. I was not at all "plugged in" to any developer community and the actual human developers I knew were limited to those at my place of work. Podcasts changed all of that.

High level awareness over deep mastery

First things first: if you set aside time to listen to a podcast with the hopes of really learning some deep details about a particular topic, you may be disappointed. This is not to say that podcasts lack rich technical content; they simply are not the medium by which one should expect to gain mastery over a given topic.

Most will agree that technology workers like those likely reading this post are constantly inundated with new technologies, tools, and ideas. Sometimes it can feel like we are constantly making decisions as to what NOT to learn because no human being can possibly set out to study and even gain a novice ability to work with all of this information. So it's important that the facts we use to decide where to invest our learning efforts are as well informed as possible.

I like the fact that I can casually listen to several podcasts and build an awareness of concepts that may be useful to me and that I can draw from later at a deeper level. There have now been countless times that I have come across a particular problem and recalled something I heard in a podcast that I think may be applicable. At that time I can google the topic and either determine that it's not worth pursuing or start to dive in and explore.

So many trends and ideas - you need to be aware

There is so much going on in our space and at such a fast pace. As I mentioned above, it's simply impossible to grasp everything. It's also impossible to simply follow every trending topic. However, we all need to maintain some kind of feed to the greater technical community in order to maintain at least a basic awareness of what is current in our space. It's just too easy to live out our careers in isolation, regardless of how smart we are, and miss out on so many of the great ideas in circulation around us.

When I started listening to podcasts, my awareness of and exposure to new ideas took off and allowed me to follow new disciplines that truly stretched me. I may not have gained this awareness had I not had this link to the "outside world."

Some of the significant "life changing" ideas that podcasts introduced me to were Test Driven Development, Inversion of Control patterns and container implementations, several significant open source projects and, more importantly, a curiosity to become actively involved in open source.

Making a bigger impact

After listening to several podcasts I began to take stock of my career and realize that while I had managed to put out some good technology and gain notoriety within my own workplace, that notoriety and overall impact did not reach far beyond that relatively small sphere of influence. Listening to podcasts and being exposed to the guests that appeared on them made me recognize the value of "getting out there" and becoming involved with a broader group. This especially hit home when I decided to change jobs after being with the same employer for nine years.

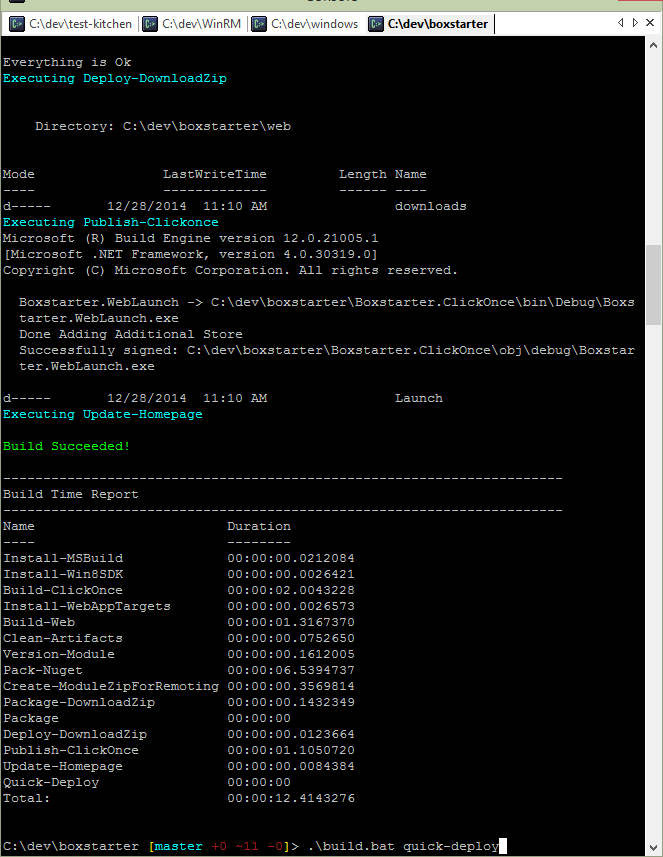

It was in large part the prolific bloggers I heard interviewed who inspired me to start my own blog. I had listened to tons of open source project contributors talk about the projects they started and maintain, and I eventually started my own projects. A couple of these got noticed and I have now been invited to speak on a few podcasts myself. That just seems crazy and tends to strongly invoke my deep-seated imposter syndrome, but they were all a lot of fun.

I even got to work with a podcaster who I enjoyed listening to for years, David Starr (@elegantcoder), and had the privilege of sitting right next to him every day. What a treat and I have to say that the real life David lived up to the episodes I enjoyed on my runs years before. If you want to hear someone super smart, I'm talking about David, have a listen to his interview on Hanselminutes.

Podcasts I listen to

So my tastes and the topics I tend to gravitate towards have changed over the past few years. For instance, I listen to more "devopsy" podcasts and fewer webdev shows than I used to, but I still religiously listen to some of the first podcasts I started with. Some I enjoy more for the host than the topics covered.

Here are the podcasts I subscribe to today in alphabetical order:

.Net Rocks!

This may have been the first series I listened to and I still listen now and again. As the name suggests, its focus is on .NET technologies. Carl Franklin and Richard Campbell do a great and very professional job producing this podcast.

Arrested Devops

This is a fairly new podcast focusing on devops topics and usually includes not only the hosts, Matt Stratton, Trevor Hess, and Bridget Kromhout, but also one or more great guests knowledgeable about devops topics. You will also learn, and I'll just tell you right now, that there is always devops in the banana stand. I did not know that.

I've had the pleasure of meeting Matt on a few occasions at some Chef events. He's a great guy, fun to talk to and passionate about devops in the Windows space.

The Cloudcast

Put on by Aaron Delp and Brian Gracely, I just started listening to this one and so far really like it. I work for a cloud so it seems only natural that I listen to such a podcast.

Devops Cafe

Another great podcast focusing on devops topics put on by John Willis and Damon Edwards. The favicon of their website looks like a Minecraft cube. Is there meaning here? I don't know but I like it.

Food Fight Show

Another Devops centered podcast hosted by Nathan Harvey and Brandon Burton. The show often covers topics relevant to the Chef development community. So if you are interested in Chef, I especially recommend this show but its coverage certainly includes much more.

Hanselminutes

Another show that I have been listening to since the beginning of my podcast listening. It's hosted by Scott Hanselman and I think he has a real knack for interviewing other engineers. Many of the shows cover topics relevant to Microsoft technologies, but in recent years Scott has been focusing a lot on broad, and I think important, social issues and how they intersect with developer communities. It's really good stuff.

Herding Code

A great show that often, but not necessarily always, focuses on web-based technologies. These guys - Jon Galloway, K. Scott Allen, Kevin Dente, and Scott Koon - ask a lot of great questions of their guests and have the ability to dive deep into technical issues.

Ops All the Things

Put on by Steven Murawski and Chris Webber talking about devops related topics. I learned about Steven from his appearances on several other podcasts talking about Microsoft's DSC (Desired State Configuration) and his experiences working with it at Stack Exchange. I've had the privilege of meeting Steven and recently working with him on a working group aimed at bringing Test-Kitchen (an infrastructure automation testing tool) to Windows.

PowerScripting Podcast

A great show focused on PowerShell hosted by Jonathan Walz and Hal Rottenberg. If you like or are interested in PowerShell, you should definitely subscribe to this podcast. They have tons of great guests, including at least three episodes with Jeffrey Snover, the creator of PowerShell.

Runas Radio

A weekly interview show with Richard Campbell and an interesting guest, focusing on Microsoft IT Professional (Ops) topics and, lately, many "devops" related guests and topics.

The Ship Show

Another podcast focused on devops topics hosted by J. Paul Reed, Youssuf El-Kalay, EJ Ciramella, Seth Thomas, Sascha Bates, and Pete Cheslock. These episodes often include great discussion both among the hosts and with some great guests.

Software Defined Talk

Another new show in my feed but this one is special. It's hosted by Michael Coté, Matt Ray, and Brandon Whichard. I find these guys very entertaining and informative. The show tends to focus on general market trends in the software industry but there is something about the three of these guys and their personalities that I find really refreshing. I walk away from all of these episodes with a good chuckle and with several tidbits of industry knowledge I didn't have before.

Software Engineering Radio

Here is another show that I have been listening to since the beginning. One thing I like about this series is that it really has no core technical focus and therefore provides a nice range of topics across "devops", process management, and engineering, covering several different disciplines. I highly recommend a recent episode, Gang of Four – 20 Years Later.

This Developer's Life

There hasn't been a new episode in over a year and perhaps there never will be another, but each of these episodes is a must-listen. If you like the popular This American Life podcast, you should really enjoy this series, which shamelessly copies the former but focuses on issues core to development. Scott Hanselman and Rob Conery are true creative geniuses here.

Windows Weekly

It took me a couple of episodes to get into this one but I now look forward to it every week. Hosted by Leo Laporte, Mary Jo Foley and Paul Thurrott, it takes a more "end user" view into Microsoft technologies. Now that I no longer work for Microsoft I find it all the more interesting to get some inside scoop on that place where I used to work.

The Goat Farm

I just discovered this and it looks like another good addition to my list. It's run by Michael Ducy and Ross Clanton. I just listened to my first episode last night: Taylorism, Hating Agile, and DevOps at CSG.